Eye Gaze in Salience Modeling for Spoken Language Processing

Motivations and Objectives

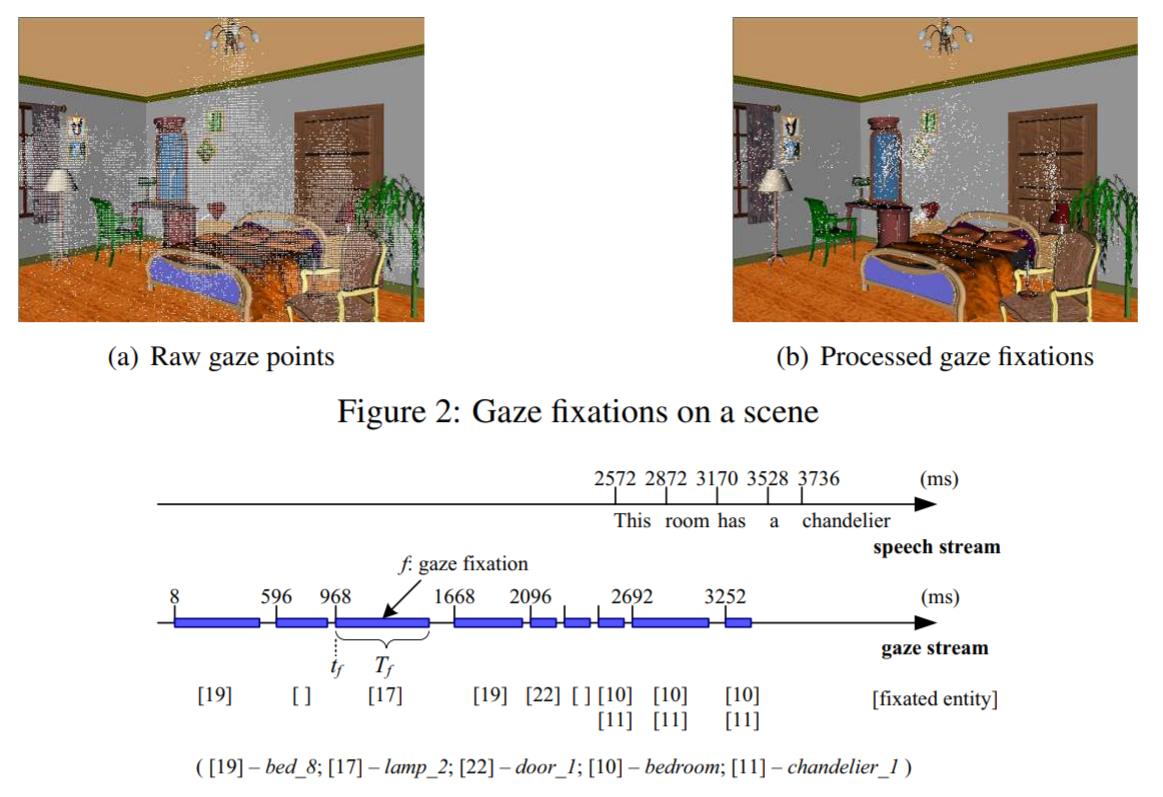

Previous psycholinguistic work has shown that eye gaze is tightly linked to human language processing. Almost immediately after hearing a word, listeners move their eyes to the corresponding real-world referent; likewise, just before speaking a word, speakers look at the object being mentioned. Eye gaze is not only highly reliable but also an implicit, subconscious reflex accompanying speech: the user does not need to make a conscious decision, and the eyes move automatically toward the relevant object, often without the user even being aware of it. Motivated by these psycholinguistic findings, we hypothesize that during human-machine conversation, the user's eye gaze, coupled with the conversation context, can signal which part of the physical world (related to the domain and the graphical interface) is most salient at each point of communication. This salience in the physical world will in turn prime what users communicate to the system, and can therefore be used to tailor the interpretation of speech input. Based on this hypothesis, this project examines the role of eye gaze in human language production during human-machine conversation and develops algorithms and systems that incorporate gaze-based salience modeling into robust spoken language understanding. This work is supported by NSF (Co-PI: Fernanda Ferreira, University of Edinburgh).

Related Papers

- Eye Gaze for Reference Resolution in Multimodal Conversational Interfaces. Z. Prasov, Ph.D. Dissertation, 2011.

- Eye Gaze with Speech Recognition Hypotheses to Resolve Exophoric References in Situated Dialogue. Z. Prasov and J. Y. Chai. Conference on Empirical Methods in Natural Language Processing (EMNLP). MIT, MA. October 2010.

- Context-based Word Acquisition for Situated Dialogue in a Virtual World. S. Qu and J. Y. Chai. Journal of Artificial Intelligence Research, Volume 37, pp. 347-377, March 2010.

- Between Linguistic Attention and Gaze Fixations in Multimodal Conversational Interfaces. R. Fang, J. Y. Chai, and F. Ferreira. The 11th International Conference on Multimodal Interfaces (ICMI). Cambridge, MA, USA, November 2-6, 2009.

- The Role of Interactivity in Human Machine Conversation for Automated Word Acquisition. S. Qu and J. Y. Chai. The 10th Annual SIGDIAL Meeting on Discourse and Dialogue, London, UK, September 2009.

- Incorporating Temporal and Semantic Information with Eye Gaze for Automatic Word Acquisition in Multimodal Conversational Systems. S. Qu and J. Y. Chai. Proceedings of the 2008 Conference on Empirical Methods in Natural Language Processing (EMNLP). Honolulu, October 2008.

- What’s in a Gaze? The Role of Eye-Gaze in Reference Resolution in Multimodal Conversational Interfaces. Z. Prasov and J. Y. Chai. ACM 12th International Conference on Intelligent User Interfaces (IUI). Canary Islands, January 13-17, 2008.

- Automated Vocabulary Acquisition and Interpretation in Multimodal Conversational Systems. Y. Liu, J. Y. Chai, and R. Jin. The 45th Annual Meeting of the Association for Computational Linguistics (ACL). Prague, Czech Republic, June 23-30, 2007.

- An Exploration of Eye Gaze in Spoken Language Processing for Multimodal Conversational Interfaces. S. Qu and J. Y. Chai. 2007 Meeting of the North American Chapter of the Association for Computational Linguistics (NAACL-07). Rochester, NY, April 2007.

- Eye Gaze for Attention Prediction in Multimodal Human Machine Conversation. Z. Prasov, J. Y. Chai, and H. Jeong. The AAAI 2007 Spring Symposium on Interaction Challenges for Artificial Assistants. Palo Alto, CA. March 2007.