Multimodal Learning for Situated Language Understanding

Motivations and Objectives

Using situated dialogue in virtual worlds and conversational interfaces as our setting, we have investigated the use of non-verbal modalities (e.g., eye gaze and deictic gestures) in language processing and conversation grounding. The virtual-world setting has important applications in education, training, and entertainment. It also provides a simplified simulation environment for studying situated language processing toward physical-world interaction.

Selected Recent Papers

Language & 3D Vision

- Jianing Yang, Alexander Sax, Kevin J. Liang, Mikael Henaff, Hao Tang, Ang Cao, Joyce Chai, Franziska Meier, Matt Feiszli. Fast3R: Towards 3D Reconstruction of 1000+ Images in One Forward Pass. Preprint, 2025.

- Jianing Yang, Xuweiyi Chen, Nikhil Madaan, Madhavan Iyengar, Shengyi Qian, David F. Fouhey, Joyce Chai. 3D-GRAND: A Million-Scale Dataset for 3D-LLMs with Better Grounding and Less Hallucination. Preprint, 2024.

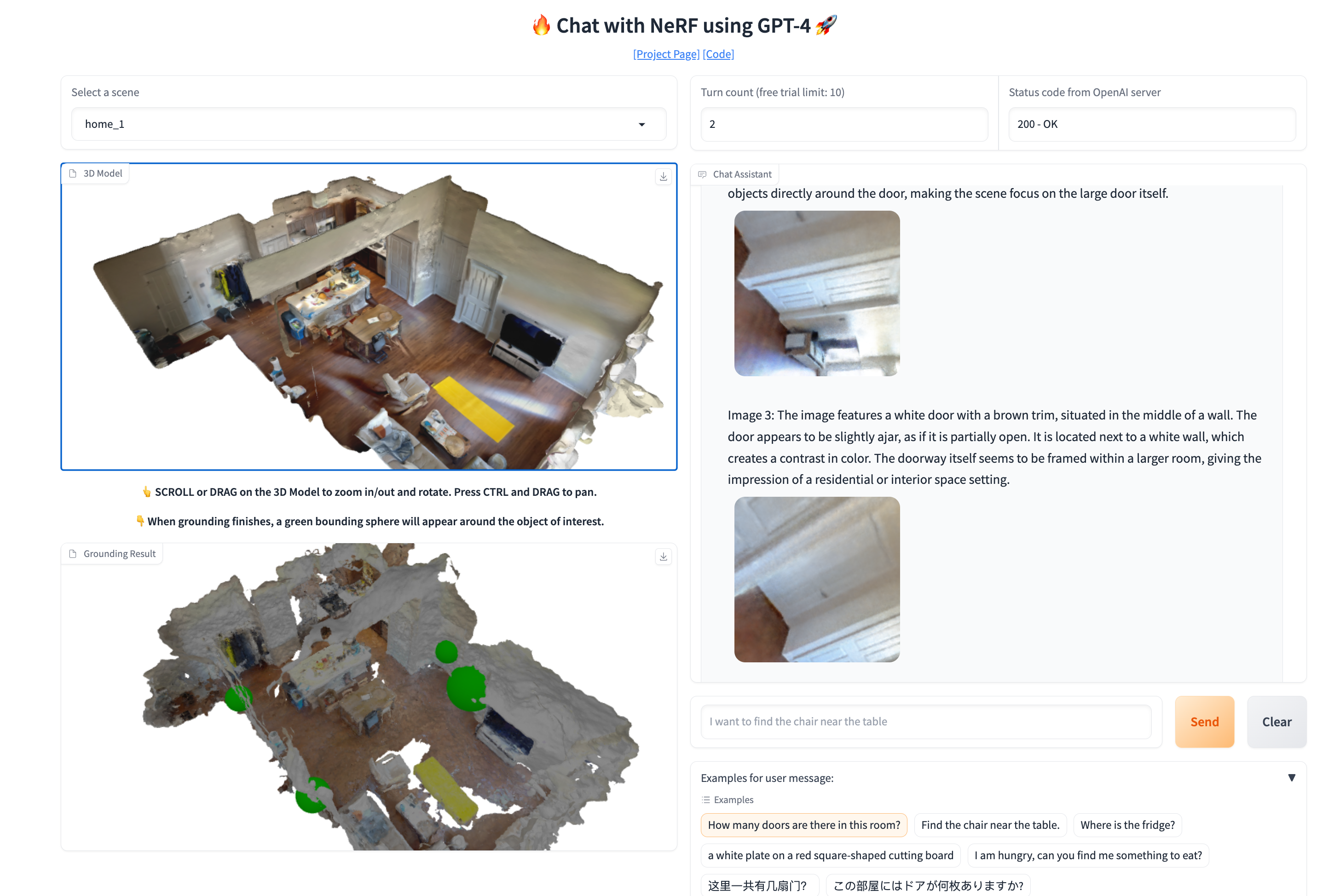

- Jianing Yang, Xuweiyi Chen, Shengyi Qian, Nikhil Madaan, Madhavan Iyengar, David Fouhey, Joyce Chai. LLM-Grounder: Open-Vocabulary 3D Visual Grounding with Large Language Model as an Agent. ICRA, 2024.

- Yichi Zhang, Jianing Yang, Jiayi Pan, Shane Storks, Nikhil Devraj, Ziqiao Ma, Keunwoo Peter Yu, Yuwei Bao, Joyce Chai. DANLI: Deliberative Agent for Following Natural Language Instructions. EMNLP, 2022.

Language & 2D Vision

- Zheyuan Zhang, Fengyuan Hu, Jayjun Lee, Freda Shi, Parisa Kordjamshidi, Joyce Chai, Ziqiao Ma. Do Vision-Language Models Represent Space and How? Evaluating Spatial Frame of Reference Under Ambiguities. ICLR, 2025. (Oral)

- Xuweiyi Chen, Ziqiao Ma, Xuejun Zhang, Sihan Xu, Shengyi Qian, Jianing Yang, David F. Fouhey, Joyce Chai. Multi-Object Hallucination in Vision-Language Models. NeurIPS, 2024.

- Sihan Xu, Yidong Huang, Jiayi Pan, Ziqiao Ma, Joyce Chai. Inversion-Free Image Editing with Natural Language. CVPR, 2024.

- Yichi Zhang, Ziqiao Ma, Xiaofeng Gao, Suhaila Shakiah, Qiaozi Gao, Joyce Chai. GROUNDHOG: Grounding Large Language Models to Holistic Segmentation. CVPR, 2024.

- Yichi Zhang, Jiayi Pan, Yuchen Zhou, Rui Pan, Joyce Chai. Grounding Visual Illusions in Language: Do Vision-Language Models Perceive Illusions Like Humans? EMNLP, 2023.

- Sihan Xu, Ziqiao Ma, Yidong Huang, Honglak Lee, Joyce Chai. CycleNet: Rethinking Cycle Consistency in Text-Guided Diffusion for Image Manipulation. NeurIPS, 2023.

- Ziqiao Ma, Jiayi Pan, Joyce Chai. World-to-Words: Grounded Open Vocabulary Acquisition through Fast Mapping in Vision-Language Models. ACL, 2023. (Outstanding Paper Award)

Language & Eye Gaze

- Zahar Prasov and Joyce Chai. Fusing Eye Gaze with Speech Recognition Hypotheses to Resolve Exophoric References in Situated Dialogue. EMNLP, 2010.

- Shaolin Qu and Joyce Chai. Incorporating Temporal and Semantic Information with Eye Gaze for Automatic Word Acquisition in Multimodal Conversational Systems. EMNLP, 2008.

- Shaolin Qu and Joyce Chai. An Exploration of Eye Gaze in Spoken Language Processing for Multimodal Conversational Interfaces. NAACL, 2007.