Interactive Task Learning for Robotics and Embodied Dialogue Agents

Motivations and Objectives

We envision that the next generation of artificial intelligence (AI) will adopt an embodied paradigm, one that enables AI agents to operate in the physical world, interpret and process multimodal inputs, learn from situated communication with humans, and collaborate with humans on complex tasks. The potential impact of embodied AI is tremendous, ranging from robots that serve as waiters in restaurants or help elderly people with household chores to the pursuit of artificial general intelligence.

Our thoughts and positions:

- Yue Zhang, Ziqiao Ma, Jialu Li, Yanyuan Qiao, Zun Wang, Joyce Chai, Qi Wu, Mohit Bansal, Parisa Kordjamshidi. Vision-and-Language Navigation Today and Tomorrow: A Survey in the Era of Foundation Models. TMLR, 2024.

- Joyce Chai, Qiaozi Gao, Lanbo She, Shaohua Yang, Sari Saba-Sadiya, Guangyue Xu. Language to Action: Towards Interactive Task Learning with Physical Agents. IJCAI (Invited Paper), 2018.

Selected Recent Papers

Embodied Dialogue Agents for Instruction Following

- Yinpei Dai, Jayjun Lee, Nima Fazeli, Joyce Chai. RACER: Rich Language-Guided Failure Recovery Policies for Imitation Learning. ICRA, 2025.

- Jiajun Xi, Yinong He, Jianing Yang, Yinpei Dai, Joyce Chai. Teaching Embodied Reinforcement Learning Agents: Informativeness and Diversity of Language Use. EMNLP, 2024.

- Yinpei Dai, Run Peng, Sikai Li, Joyce Chai. Think, Act, and Ask: Open-World Interactive Personalized Robot Navigation. ICRA, 2024.

- Yidong Huang, Jacob Sansom, Ziqiao Ma, Felix Gervits, Joyce Chai. DriVLMe: Enhancing LLM-based Autonomous Driving Agents with Embodied and Social Experiences. IROS, 2024.

- Yichi Zhang, Jianing Yang, Keunwoo Peter Yu, Yinpei Dai, Shane Storks, Yuwei Bao, Jiayi Pan, Nikhil Devraj, Ziqiao Ma, Joyce Chai. SEAGULL: An Embodied Agent for Instruction Following through Situated Dialog. Alexa Prize SimBot Challenge Proceedings (First Prize), 2023.

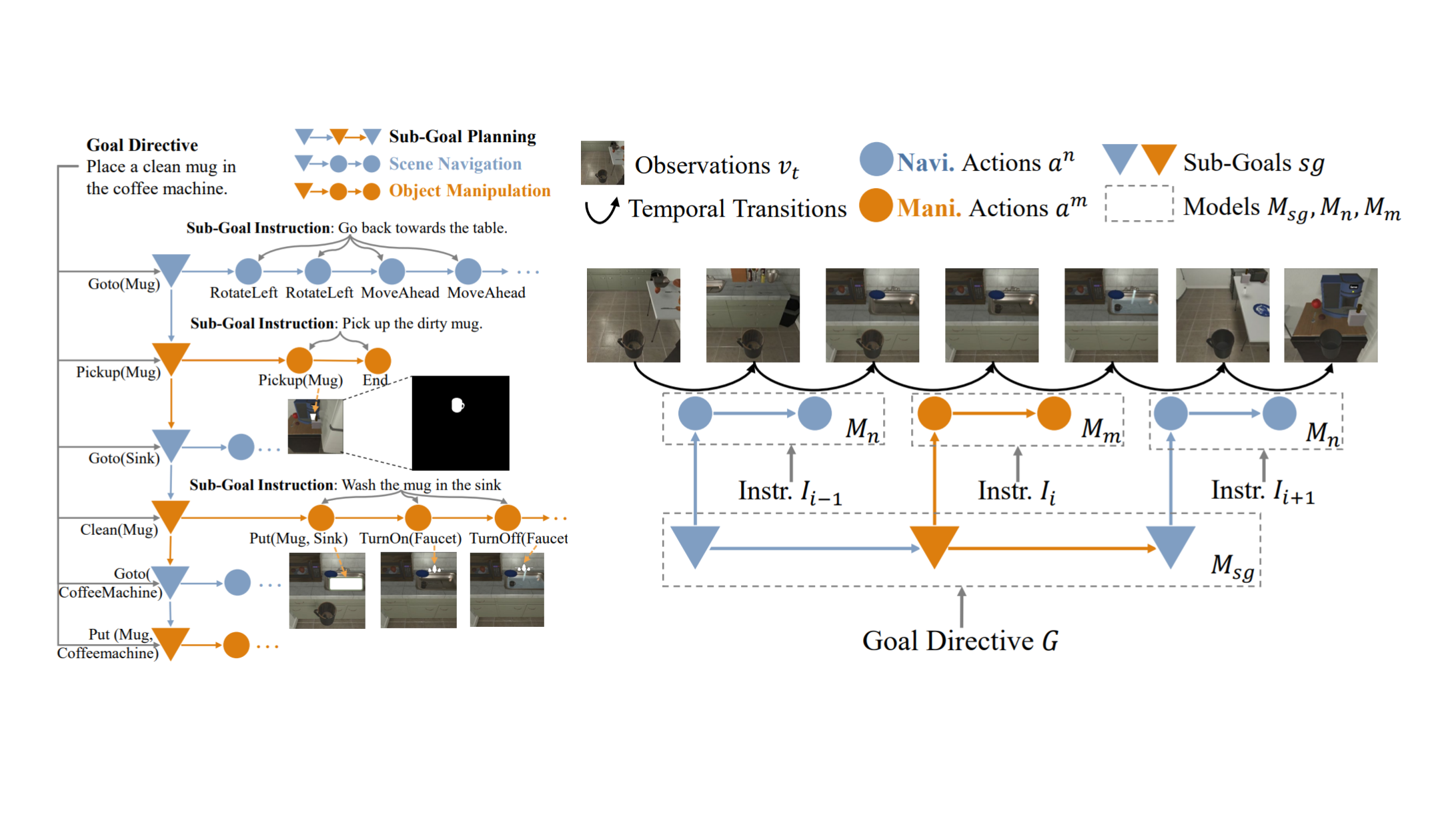

- Yichi Zhang, Jianing Yang, Jiayi Pan, Shane Storks, Nikhil Devraj, Ziqiao Ma, Keunwoo Peter Yu, Yuwei Bao, Joyce Chai. DANLI: Deliberative Agent for Following Natural Language Instructions. EMNLP, 2022.

- Ziqiao Ma, Ben VanDerPloeg, Cristian-Paul Bara, Yidong Huang, Eui-In Kim, Felix Gervits, Matthew Marge, Joyce Chai. DOROTHIE: Spoken Dialogue for Handling Unexpected Situations in Interactive Autonomous Driving Agents. EMNLP Findings, 2022.

- Yichi Zhang, Joyce Chai. Hierarchical Task Learning from Language Instructions with Unified Transformers and Self-Monitoring. ACL Findings, 2021.

Interactive Task Learning from Situated Dialogue

- Shane Storks, Itamar Bar-Yossef, Yayuan Li, Zheyuan Zhang, Jason J. Corso, Joyce Chai. Explainable Procedural Mistake Detection. Preprint, 2024.

- Yuwei Bao, Keunwoo Yu, Yichi Zhang, Shane Storks, Itamar Bar-Yossef, Alexander De La Iglesia, Megan Su, Xiao Lin Zheng, Joyce Chai. Can Foundation Models Watch, Talk and Guide You Step by Step to Make a Cake? EMNLP Findings, 2023.

- Lanbo She, Joyce Chai. Interactive Learning of Grounded Verb Semantics towards Human-Robot Communication. ACL, 2017.

- Changsong Liu, Shaohua Yang, Sari Sadiya, Nishant Shukla, Y. He, Song-Chun Zhu, Joyce Chai. Jointly Learning Grounded Task Structures from Language Instruction and Visual Demonstration. EMNLP, 2016.

- Lanbo She, Joyce Chai. Incremental Acquisition of Verb Hypothesis Space towards Physical World Interaction. ACL, 2016.

- Lanbo She, Shaohua Yang, Yu Cheng, Yunyi Jia, Joyce Chai, Ning Xi. Back to the Blocks World: Learning New Actions through Situated Human-Robot Dialogue. SIGDIAL, 2014.